Some time ago, I wrote an article on this topic for Google’s reCAPTCHA v2; you can check it here.

I had a few requests to do the same for reCAPTCHA v3, so here we go.

The main difference between v2 and v3 is that v3 doesn’t interrupt users; it runs adaptive risk analysis in the background to tell robots and humans apart.

Finally, v3 returns a score from 0.0 (very likely a bot) to 1.0 (very likely a human), and you can choose the threshold that is most appropriate for your website.

Tested on WordPress 5.9 with the Twenty Seventeen theme.

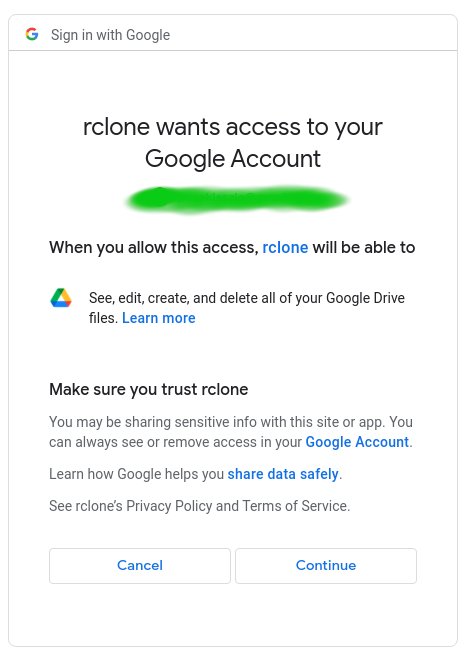

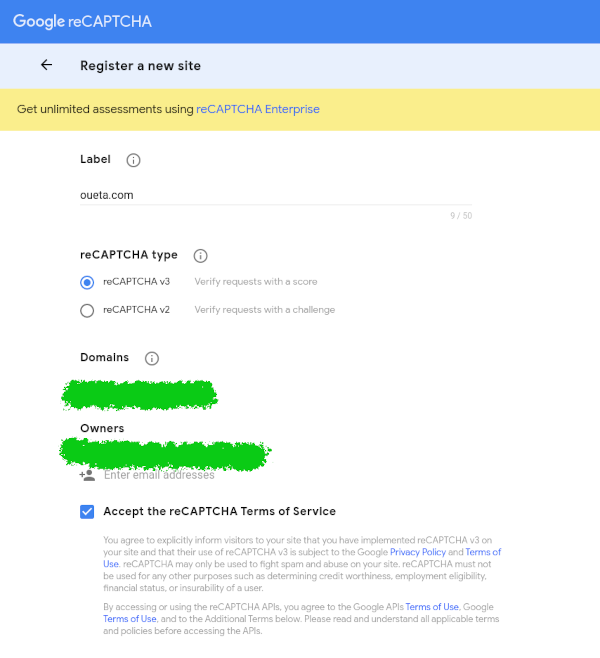

The first step is to register your site with Google.

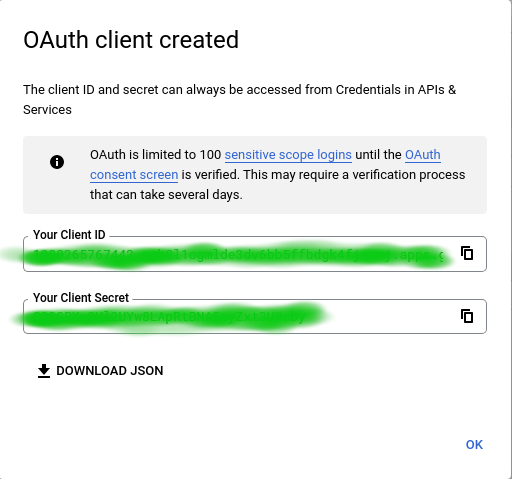

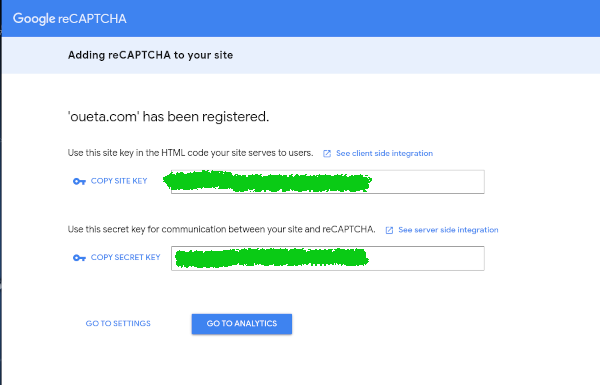

Once you have registered, Google will provide you with a site key and a secret key. We will use the site key in our front-end code, while the secret key will be used in the back-end code.
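One possible way to keep both keys in a single place, rather than pasting them into several snippets, is to define them as constants. This is only a sketch: the constant names RECAPTCHA_SITE_KEY and RECAPTCHA_SECRET_KEY and the helper recaptcha_key() are hypothetical and are not part of WordPress or reCAPTCHA.

```php
// Hypothetical constants; on a real site they could be defined in wp-config.php instead.
if (!defined('RECAPTCHA_SITE_KEY')) {
    define('RECAPTCHA_SITE_KEY', 'YOUR_SITE_KEY_HERE');
}
if (!defined('RECAPTCHA_SECRET_KEY')) {
    define('RECAPTCHA_SECRET_KEY', 'YOUR_SECRET_KEY_HERE');
}

/**
 * Hypothetical helper: return the requested key ('site' or 'secret'),
 * or an empty string when the name is unknown.
 */
function recaptcha_key($which) {
    if ($which === 'site') {
        return RECAPTCHA_SITE_KEY;   // safe to print in front-end markup
    }
    if ($which === 'secret') {
        return RECAPTCHA_SECRET_KEY; // must only be used server-side
    }
    return '';
}
```

With something like this in place, the placeholders in the snippets of this article could be swapped for recaptcha_key('site') and recaptcha_key('secret').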

Edit single.php in your theme folder (in my example /wp-content/themes/twentyseventeen) and add the following code before get_header(); making sure to replace YOUR_SITE_KEY_HERE with your site key.

    wp_enqueue_script('google-recaptcha', 'https://www.google.com/recaptcha/api.js?render=YOUR_SITE_KEY_HERE');
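If you would rather not edit a template file, a possible alternative is to enqueue the script from functions.php on the wp_enqueue_scripts hook. This is a sketch, not the method used in the rest of this article: the callback name enqueue_google_recaptcha is made up, and the is_singular() / comments_open() condition is an assumption about where you want the script to load.

```php
// Hypothetical callback: load the reCAPTCHA v3 script only where a comment form can appear.
function enqueue_google_recaptcha() {
    if (is_singular() && comments_open()) {
        wp_enqueue_script(
            'google-recaptcha',
            'https://www.google.com/recaptcha/api.js?render=YOUR_SITE_KEY_HERE',
            array(), // no script dependencies
            null     // do not append WordPress's own ver= query argument
        );
    }
}

// add_action() only exists inside WordPress; the guard keeps the snippet loadable on its own.
if (function_exists('add_action')) {
    add_action('wp_enqueue_scripts', 'enqueue_google_recaptcha');
}
```

Use either this or the single.php edit, not both, since both register the same script handle.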

Edit functions.php in your theme folder (in my example /wp-content/themes/twentyseventeen) and add the following code, replacing YOUR_SITE_KEY_HERE (in the grecaptcha.execute call) and YOUR_SECRET_KEY_HERE (in the is_valid_captcha function) with your own keys.

    /**
     * Google reCAPTCHA: add the token field and the script before the submit button.
     */
    function add_google_recaptcha($submit_field) {
        // The button is named "buttonSubmit" rather than "submit" so that
        // commentform.submit() remains callable from the script below.
        $submit_field['submit_field'] = '<input type="hidden" id="g-recaptcha-response" name="g-recaptcha-response">
            <input type="hidden" name="action" value="validate_captcha">
            <input name="buttonSubmit" type="submit" id="buttonSubmit" class="submit" value="Post Comment" />
            <input type="hidden" name="comment_post_ID" value="'. get_the_ID() . '" id="comment_post_ID" />
            <input type="hidden" name="comment_parent" id="comment_parent" value="0" />
            <script>
            document.getElementById("buttonSubmit").onclick = function onClick(e) {
                e.preventDefault();
                grecaptcha.ready(function() {
                    grecaptcha.execute("YOUR_SITE_KEY_HERE", {action: "submit"}).then(function(token) {
                        // Store the token in the hidden field, then submit the form.
                        document.getElementById("g-recaptcha-response").value = token;
                        document.getElementById("commentform").submit();
                    });
                });
            }
            </script>';
        return $submit_field;
    }

    // Only visitors who are not logged in get the reCAPTCHA-protected form.
    if (!is_user_logged_in()) {
        add_filter('comment_form_defaults', 'add_google_recaptcha');
    }

    /**
     * Google reCAPTCHA: check the token with Google, validate it and catch the spammer.
     */
    function is_valid_captcha($captcha) {
        $captcha_postdata = http_build_query(array(
            'secret'   => 'YOUR_SECRET_KEY_HERE',
            'response' => $captcha,
            'remoteip' => $_SERVER['REMOTE_ADDR'],
        ));
        $captcha_opts = array('http' => array(
            'method'  => 'POST',
            'header'  => 'Content-type: application/x-www-form-urlencoded',
            'content' => $captcha_postdata,
        ));
        $captcha_context = stream_context_create($captcha_opts);
        $captcha_response = json_decode(file_get_contents("https://www.google.com/recaptcha/api/siteverify", false, $captcha_context), true);

        // Treat a failed request or a malformed reply as an invalid captcha.
        if (!is_array($captcha_response) || empty($captcha_response['success']) || !isset($captcha_response['score'])) {
            return false;
        }

        // Accept only scores above the 0.5 threshold.
        return $captcha_response['score'] > 0.5;
    }

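    function verify_google_recaptcha() {
        // The token may be missing entirely, e.g. when a bot posts without running the script.
        $recaptcha = isset($_POST['g-recaptcha-response']) ? $_POST['g-recaptcha-response'] : '';
        if (empty($recaptcha)) {
            wp_die(__("<b>ERROR:</b> <b>Sorry, spam detected</b>"));
        } else if (!is_valid_captcha($recaptcha)) {
            wp_die(__("<b>Sorry, spam detected!</b>"));
        }
    }

    // Run the check when a comment is submitted; without a hook the function above is never called.
    if (!is_user_logged_in()) {
        add_action('pre_comment_on_post', 'verify_google_recaptcha');
    }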
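The verification request above uses file_get_contents, which only works over HTTPS when allow_url_fopen is enabled on the server. As a possible alternative, here is a sketch that sends the same request through WordPress's HTTP API (wp_remote_post). The function name fetch_recaptcha_response and the 10-second timeout are my own choices for illustration, not something Google or WordPress prescribes.

```php
// Hypothetical helper: return the decoded siteverify reply as an array, or null on failure.
function fetch_recaptcha_response($token, $secret) {
    $response = wp_remote_post('https://www.google.com/recaptcha/api/siteverify', array(
        'timeout' => 10, // assumed value; tune it for your host
        'body'    => array(
            'secret'   => $secret,
            'response' => $token,
            'remoteip' => isset($_SERVER['REMOTE_ADDR']) ? $_SERVER['REMOTE_ADDR'] : '',
        ),
    ));

    // Network-level failures come back as a WP_Error object.
    if (is_wp_error($response)) {
        return null;
    }

    // The reply body is JSON; anything that does not decode to an array is treated as a failure.
    $decoded = json_decode(wp_remote_retrieve_body($response), true);
    return is_array($decoded) ? $decoded : null;
}
```

The returned array could then be checked for its success and score fields in the same way as in is_valid_captcha.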

The user's score needs to be greater than 0.5 for the comment to be posted; you can change this threshold in the score check inside is_valid_captcha. Google also provides a nice dashboard where you can check your users' behavior.
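To make the threshold easier to tune, the decision can be pulled into a small function that takes the decoded siteverify reply. The sketch below is a variant under stated assumptions, not the code used above: the function name recaptcha_passes and its parameters are hypothetical, and it additionally compares the action field of the reply with the "submit" action that was passed to grecaptcha.execute.

```php
/**
 * Hypothetical helper: decide whether a decoded siteverify reply is acceptable.
 *
 * $reply     decoded JSON reply as an associative array
 * $threshold minimum score, exclusive (0.5 in this article)
 * $action    action name passed to grecaptcha.execute on the front end
 */
function recaptcha_passes($reply, $threshold = 0.5, $action = 'submit') {
    if (!is_array($reply) || empty($reply['success'])) {
        return false; // request failed or token rejected
    }
    if (!isset($reply['score']) || !isset($reply['action'])) {
        return false; // not a v3 reply
    }
    if ($reply['action'] !== $action) {
        return false; // token was issued for a different action
    }
    return $reply['score'] > $threshold;
}

// Example replies, made up for illustration.
var_dump(recaptcha_passes(array('success' => true, 'score' => 0.9, 'action' => 'submit'))); // bool(true)
var_dump(recaptcha_passes(array('success' => true, 'score' => 0.3, 'action' => 'submit'))); // bool(false)
```

A stricter site could call it with a higher threshold, for example recaptcha_passes($reply, 0.7), at the cost of rejecting more genuine visitors.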